Joint Optimization of Task Caching,Computation Offloading and Resource Allocation for Mobile Edge Computing

Zhixiong Chen ,Zhengchuan Chen,3 ,Zhi Ren ,Liang Liang,2 ,Wanli Wen,2 ,Yunjian Jia,2,*

1 School of Microelectronics and Communication Engineering,Chongqing University,Chongqing,400044 China

2 Chongqing Key Laboratory of Space Information Network and Intelligent Information Fusion,Chongqing,400044 China

3 National Mobile Communications Research Laboratory,Southeast University,Nanjing,210096 China

4 School of Communication and Information Engineering,Chongqing University of Posts and Telecommunications,Chongqing,400065 China

Abstract: Applications with sensitive delay and sizeable data volumes,such as interactive gaming and augmented reality,have become popular in recent years.These applications pose a huge challenge for mobile users with limited resources.Computation offloading is a mainstream technique to reduce execution delay and save energy for mobile users.However,computation offloading requires communication between mobile users and mobile edge computing(MEC)servers.Such a mechanism would difficultly meet users’ demand in some data-hungry and computation-intensive applications because the energy consumption and delay caused by transmissions are considerable expenses for users.Caching task data can effectively reduce the data transmissions when users offload their tasks to the MEC server.The limited caching space at the MEC server calls for judiciously decide which tasks should be cached.Motivated by this,we consider the joint optimization of computation offloading and task caching in a cellular network.In particular,it allows users to proactively cache or offload their tasks at the MEC server.The objective of this paper is to minimize the system cost,which is defined as the weighted sum of task execution delay and energy consumption for all users.Aiming at establishing optimal performance bound for the system design,we formulate an optimization problem by jointly optimizing the task caching,computation offloading,and resource allocation.The problem is a challenging mixed-integer non-linear programming problem and is NP-hard in general.To solve it efficiently,by using convex optimization,Karmarkar’s algorithm and the proposed fast search algorithm,we obtain an optimal solution of the formulated problem with manageable computational complexity.Extensive simulation results show that in comparison to some representative benchmark methods,the proposed solution can effectively reduce the system cost.

Keywords: mobile edge computing;computation offloading;caching;resource allocation

I.INTRODUCTION

With the rapid proliferation of mobile devices and the development of wireless communication technology,more and more novel and sophisticated applications are emerging,such as face recognition,augmented reality,and interactive gaming,which require a large amount of computation resources and energy[1].However,mobile devices are usually resourceconstrained due to limited computation capability and battery capacity.To cope with the confliction between cost and demand,computation offloading has attracted significant attention.With computation offloading,mobile users can offload their computation tasks to devices with adequate computation resources [2,3].This would effectively alleviate the computational resource requirements for mobile users.Among multiple computation offloading methods,mobile edge computing(MEC)is a promising selection which has been widely discussed by the industry and academia in recent years[4].The concept of MEC was firstly proposed by the European Telecommunications Standard Institute(ETSI)in 2014 and was defined as a new platform that provides information technology and cloudcomputing capabilities within the radio access network in close proximity to mobile subscribers[5].

Equipped with MEC servers on the edge of the network,mobile edge computing provides users with a short delay and high-efficient computing services.It is possible for mobile users to offload their computation tasks to the MEC server which has adequate computation resources[6].The authors in[7]investigated a collaborative approach based on MEC and cloud computing that offloads services to automobiles in vehicular networks to improve the system utility and reduce the computation time.A joint task offloading and resource allocation scheme has been proposed to maximize the users’ task offloading gains,which is measured by a weighted sum of reductions in task completion time and energy consumption[8].The authors in [9] considered the task offloading problem in the ultra-dense network,which led to a low delay offloading scheme while saving the battery life of the users’equipment.In[10],the authors studied the resource allocation for a multiuser MEC-enabled network based on time-division multiple access(TDMA)and orthogonal frequency-division multiple access (OFDMA),which led to an energy-efficient offloading scheme.A two-tier computation offloading framework in heterogeneous networks has been proposed to address the joint computation offloading and user association problem [11].The authors in [12] developed a novel user-centric energy-aware mobility management scheme for mobile edge computing in an ultra-dense network.In[13],the authors investigated the computation offloading problem of the coexistence and synergy between fog computing and cloud computing in the Internet of Everything (IoE).The authors in [14]constructed a queue model-based mobile users’workload offloading problem and utilized the Lyapunov optimization framework to make a trade-off between system offloading utility and queue backlog.To cope with the uncertain features of mobile users’ demands for resources in computation offloading,the authors in [15] proposed to leverage logarithmic utility functions to capture the mobile users’satisfaction and designed a resource reservation algorithm to avoid overreservation and overdemand resource scheduling.

While computing offloading benefits the computation-intensive users,it still cannot meet the users’ demand for delay and energy consumption in some specific applications due to the uncertainty of the communication channel.The authors in [16]leveraged computation replication in task offloading to reduce the download latency in multi-user multi-server MEC networks,which is an efficient scheme to cope with the downlink channel suffering severe fading and interference.A learning-based task replication algorithm has been proposed in [17],which exploited the abundant computing resources on vehicles to improve the delay performance and service reliability in a vehicular edge computing system.In [18],the authors designed a centralized task replication algorithm to maximize the probability of completing a task before the deadline in a dynamic vehicle environment.

Since computation offloading requires users to upload both the user task input parameters and the corresponding program that processes it [19—21],data caching was proposed as a promising technology for further improving the delay performance and reducing energy consumption in the MEC network.The task data caching can be classified into computation content caching and computation service caching[19,20].In computation content caching,the MEC server or user side caching the input parameters and computation results,while computation service caching means caching the program data for executing computation tasks.In [19],the authors studied the problem of a single edge server that assists a mobile user to execute a sequence of computation tasks,and designed an alternating optimization method to minimize the computation delay and energy consumption of the mobile user.The author in [20] proposed an MEC service pricing scheme to coordinate with the service caching decisions and control users’ task offloading behavior in a cellular network.The authors in [22] studied joint service caching and task offloading for MECenabled dense cellular networks and developed an efficient online algorithm.A collaborative offloading scheme has been proposed to cache the popular computation results that are likely to be reused by other mobile users to minimize the execution delay for mobile users [23].In [24],the authors investigated the problem of joint optimization of task caching and offloading on the edge cloud with the computing and storage resource constraint and developed an effective algorithm to achieve high energy efficiency of the mobile devices while meeting the users’ demand for delay.The authors in [25] studied the dynamic service caching problem in mobile edge networks,which minimize the traffic load that needs to be forwarded to the cloud,while service switching cost of base stations is also taken into account.In [26],the authors investigated the joint optimization of service placement and request routing in MEC-enabled multi-cell networks with storage,computation and communication constraints.

Task caching or offloading schemes in the above literature are mainly based on two models,i.e.,binary model[22—24]and continuous partial model[10,11].In binary model,a task cannot be partitioned into multiple subtasks.For continuous partial model,a task can be divided into infinite subtasks according to any real ratio of input size between zero and one.In fact,it is valuable to note that a task can only be divided into a limited number of subtasks based on its attributes.Besides,the previous task caching schemes directly cache the computation results [22—26].This would lack flexibility and restrict the system performance,because the executive results of one task with different input parameters may be distinct in practical scenarios.Finally,in order to make full use of computing resources of the MEC server,computing resources of the MEC sever should be allocated to each user according to the user’s caching and offloading strategy,instead of a fixed allocation in[22—24].

Motivated by this,we consider an MEC-enabled cellular network where all the tasks can be divided into multiple subtasks and can be executed by local computing or MEC computing.As stated in our conference paper[27],we introduce a task caching-based MEC framework to assist the task computing,where the MEC server can cache the executive codes of tasks to provide computation services for mobile users.Unlike the previous works in [19,20],we consider a task caching assisted multi-user computation offloading scenario,where the computing resource allocation,task caching and computation offloading decisions are jointly optimized.Aiming at establishing the optimal performance bound for system design,we propose a centralized scheme,where the MEC server is assumed to have all the knowledge for tasks’computing scheduling.Specifically,we formulate the problem as a joint resource allocation,task caching and computing offloading problem,in which the storage capacity and computation capability of the MEC server are all taken into account.We first address the computation resource allocation problem by using the convex optimization technique.Then,we propose to find the optimal summation of task caching decision and computation offloading strategy through detailed analysis.Finally,we address the computation offloading problem using a fast search algorithm and obtained the optimal task caching scheme.By comparing the proposed scheme and other three benchmark methods,it is found that the proposed joint tasking caching,computation offloading and resource allocation scheme can effectively reduce the system cost.

The main contributions of this paper are summarized as follows.

? Since computation offloading requires users to upload both the user task input parameters and the corresponding program that processes it,we introduce a MEC-based task caching framework.The MEC server can cache the task programs of tasks to provide computation services for mobile users.This framework allows users to just upload input parameters to request the MEC server to execute their tasks without uploading task programs.It can substantially improve computing performance in comparison with the traditional MEC mechanism.

? Aiming at establishing the optimal performance bound for system design,we exploit three technologies,i.e.,task caching,computation offloading,and resource allocation to minimize the system cost,which is defined as the weighted sum of the task execution delay and energy consumption for all users.The problem is formulated as a non-linear mixed integer programming problem,in which the computing capability of the server,the storage capacity are all taken into consideration.

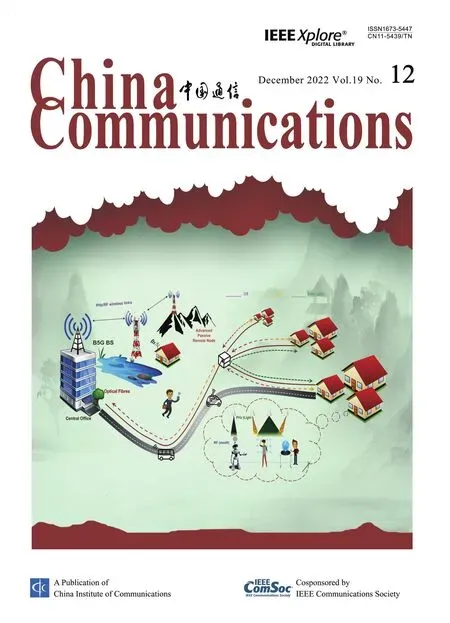

Figure 1.MEC-enabled cellular network system.

? By observing the structure of the original problem,we propose to find the global optimal solution by three steps,through which we have obtained the optimal computing resource allocation decision,the optimal task caching scheme and the optimal computation offloading solution.Specifically,we first solve the resource allocation problem by using convex optimization method.Then,we provide an effective algorithm to find the summation of number of tasks to be cached and offloaded based on analysis of the optimization problem.Finally,we establish the optimal task caching decision and computation offloading strategy in polynomial time by using a fast search algorithm.

? We conduct extensive simulations to evaluate the performance of our proposed scheme.By comparing the proposed scheme with other three benchmark schemes,it is found that the proposed scheme can significantly reduce the system cost for a wide range of parameter settings.

The rest of this paper is organized as follows.In Section II,we introduce the system model and formulate the joint resource allocation,task caching and offloading problem as a system cost minimization problem.In Section III,we propose an optimal scheme to solve the original problem.Section IV verifies the effectiveness of the proposed scheme by extensive simulations.The conclusion is drawn in Section V.

II.SYSTEM MODEL

2.1 Network Model

We consider an MEC-enabled cellular network,as shown in figure 1.There is one base station (BS) in the network serving forKusers,whose index set is denoted byK={1,2,···,K}.The BS is equipped with an MEC server to provide computation offloading and storage services to the users such as phones,wearable devices and tablets.The users are randomly distributed in the BS’s coverage and each of them has a computationally intensive task to be completed.For ease of presentation,we refer to userkand taskkinterchangeably.We consider that userkhas a computational taskQk,that is data-oriented and can be separated into multiple subtasks and computed in a distributed manner[28—30],such as components rendering in 360?VR video display and voice-to-text conversion [28].It enables that a portion of the subtasks can be offloaded to the MEC server to process while the remaining part is processed locally by the user itself.Each computation taskkis characterized by a tuple of three parameters,i.e.,whereSkrepresents that the total number of CPU cycles required to accomplish the taskk,Mkis the number of subtasks of taskk,andμdenotes the task program data size of each subtask.In particular,we assume that the number of CPU cycles required for a subtask is linearly related to the ratio of its task program data size and the total task program data size,that is,the required cycles of one subtask of taskkis

2.2 Communication Model

It is considered that the network is based on the orthogonal frequency division multiple access(OFDMA)in whichKusers are separated in the frequency domain with equal bandwidthB.Note that,using such uplink transmission technology implies that the users do not interfere with one another.LetPkdenote the transmission power of userk.Denote byHkthe channel gain between userkand the BS.Besides,we assume during the task offloading duration,the user mobility is not high.Hkis a constant due to low user mobility and quasi-static fading.Consequently,the achievable uplink rate for userkcan be characterised by Shannon capacity,i.e.,

whereσ2is the variance of complex white Gaussian channel noise.We can obtain the transmission delay that userkoffloads its taskQkto the MEC server as follows:

Accordingly,the corresponding transmission energy consumed by userkfor offloading its taskQkto the MEC server can be expressed as

2.3 Task Computing Model

When userkneeds to execute its task,it can accomplish the task through local computing,offloading to the MEC server,and requesting executive by the MEC server.For clarity,we elaborate the above three ways in the following:

? Local Computing.Denote the computing capability(i.e.,CPU cycles per second)of userk(k ∈K)bywhich is assumed to be a fixed value.When userkchooses to execute its task by local CPU,the local execution time delay for userkis given by

Since the energy consumption is proportional to the square of the frequency of the user device[31,32],we can further establish the corresponding energy consumption of taskkbeing executed locally as follows:

whereζrepresents the energy coefficient,which depends on chip architecture.

? Offloading to the MEC server.When userkoffloads the computation taskQkto the MEC server,it will compete for computing resources of the MEC server with other users.LetfCrepresent the computational capability of the MEC server.Denote byβk(0≤βk ≤1,?k ∈K)the fraction indicator of the CPU cycles of the MEC server allocated to userk.Letαk(k ∈K)represent the number of subtasks of taskkbeing offloaded to the MEC server.According to the communication model,the total delay that userkoffloads its task to the MEC server can be expressed as

where the first term on the right-hand side(RHS)is the transmission delay that userkuploads its offloaded task programs,the last term on the RHS is the computing delay that the subtasks of taskkoffloaded to process at the MEC server.Similar to many studies such as [8] and [23],we ignore the transmission delay for sending the task results from the MEC server to users.This is because the output data size after processing is generally far smaller than its task program data size,and the downlink rate from the BS to devices is higher than the uplink rate from devices to the BS.In the offloading to the MEC server case,it is noted that the energy consumed by userkis given by(3).

? Request for MEC server’s execution.Because computation offloading requires communication between users and the BS,it would bring extra transmission delay and energy consumption for users.In fact,servers are usually equipped with large storage hardware.If the executive codes and description of some commonly used subtasks are stored at the server,users can call those tasks with a few necessary input and initialization parameters.Motivated by this,we introduce an efficient way to process computation tasks,which is to precache a part of subtasks in the MEC server.When a user needs to execute its task,it can first check whether the MEC server has stored some of its subtasks.Once some subtasks are confirmed in the MEC server storage space,the user can upload the input parameters of these subtasks and request the MEC server to execute the subtasks directly without uploading executive codes.Then,the MEC server returns the computational results of these subtasks to the user.LetCrepresent the storage capacity of the MEC server and letxkdenote the number of subtasks of taskkbeing cached in the MEC server’s storage space.According to the introduced MEC computing way,the delay that userkrequests for executing its subtasks just depends on the task execution delay(without task programs uploading delay),which is

Note that we neglect the delay for uploading input parameters.This is because the size of input parameters is much smaller than that of executive codes.Besides,we consider that the energy consumption of userkis zero due to that the receiver’s energy consumption is far less than the energy consumption of other stages.

It is valuable to note that userk(?k ∈K) can execute its task through three methods,i.e.,local computing,offloading to the MEC server,and requesting executive by the MEC server.Local computing means that userkexecute their tasks through its own device.Offloading to the MEC server means that userkneed to upload task input parameters and task programs to the MEC server for task computing.Requesting executive by the MEC server means that userkjust need to upload the corresponding input parameters to the MEC server and utilize the cached task programs for task computing,without task programs uploading procedure.It is no doubt that userkshould select requesting executive by the MEC server method rather than offloading to the MEC server method when the corresponding task programs are cached,because the computing cost of requesting executive by the MEC server is smaller than that of offloading to the MEC server.When task programs of taskkare cached,userkcan accomplish its task through local computing,offloading to the MEC server,and requesting executive by the MEC server.However,when the corresponding task programs are not cached,userkcan accomplish its task through local computing and offloading to the MEC server,it cannot execute its task through requesting executive by the MEC server.

2.4 Problem Formulation

In this paper,we aim at minimizing the system cost,which is defined as the weighted sum of energy consumption and execution delay for all users under limited storage capacity and computing capabilities of the MEC server.To this end,we focus on the following three techniques:

? Task caching technique.Caching tasks provides the possibility for processing the requests for users by the MEC server directly.That is,the uploading step could be omitted with the help of task caching.Accordingly,the delay and energy consumption of users execute tasks can be further reduced.The task caching strategy is denoted asX=[x1,x2,···,xK],wherexkrepresents the number of subtasks of taskkbeing cached in the MEC server.

? Task offloading technique.When userkneeds to execute its task,it should decide how many subtasks being offloaded to the MEC server.The task offloading strategy is denoted asα=[α1,α2,···,αK],whereαkrepresents the number of subtasks of taskkbeing offloaded to the MEC server.

? Computing resource allocation technique.At the BS,when users offload tasks to the MEC server or request the MEC server to execute its task,the MEC server should allocate the computing resources for each user.The resource allocation strategy is denoted asβ=[β1,β2,···,βk],whereβkis the fraction indicator of the CPU cycles of the MEC server allocated to userk.

Based on the above definition ofX,αandβ,we can express the delay of userkfor executing its task as

where the first part in the right-hand side (RHS) is computing delay of cached subtasks,the second part in the RHS is the computing delay of offloaded subtasks,the third part in the RHS is the transmission delay,the last part in the RHS is the computing delay of local computing subtasks.Since the offloaded subtasks and the cached subtasks are executed through the same computing resource block at the MEC server.Thus,the processing of offloaded subtasks and cached subtasks are sequential.Besides,similar to many previous works [10,33],we assume that the local computing process is sequential with the MEC server computing process.

The corresponding energy consumption can be expressed as

To incorporate the effect of execution delay and energy consumption,we define the weights of computational delay and energy consumption asvTandvE,respectively.vT+vE=1,(vT,vE∈[0,1]),and define the system cost as

The objective is to minimizef(X,α,β) by jointly adjusting the task caching strategyX,the task offloading decisionαand the computing resource allocationβ.So far,we can formulate the optimal system cost problem as

In problemP,(11a) corresponds to the storage capacity constraint,which means that the total cached task programs data size cannot exceed the storage capacity of the MEC server.(11b) represents that the sum of all computing resources allocated to users is not greater than the available computing capability of the MEC server.(11c) ensures that the number of cached subtasks at the MEC server would not exceeds the total subtasks number of each task.In other words,the MEC server would not store repetitive subtasks for each task.(11d) prevents users to upload their subtasks that have already existed in the MEC server memory,guaranteeing that users would not offload redundant subtasks for their task computing.Constraint(11e)restricts the computing resource allocated to each user.Since the problem contains continuous variables and integer variables,the problem is a mixed integer non-linear programming problem and finding the optimal solution usually requires exponential time complexity[34].

III.OPTIMAL JOINT RESOURCE ALLOCATION,TASK CACHING AND COMPUTATION OFFLOADING

In this section,we solve the optimal solution of problemPand establish the optimal performance bound for system design by decomposing it into three subproblems and solving them one by one.

3.1 Optimal Computing Resource Allocation

In this subsection,we consider to establish the optimal computing resource allocation for any fixed feasible task offloading decisionα(0)and task caching decisionX(0)which satisfy constraints(11a),(11c)and(11d).Using the expression of the system cost in (10),we can write the objective function of resource allocation problem as follows:

In(12),the first part of the RHS is a function forβ,the second part of the RHS is a constant regardless ofβ.Thus,we can simplify the objective function of this resource allocation problem as the following function:

Sequentially,we can formulate the resource allocation problem as

Theorem 1.The optimal computing resource allocation βk (?k ∈K)for problem P1are given by

Proof.See Appendix A.

Up to now,we have obtained the optimal computing resource allocation policy which is a function ofXandα.We plug the optimalβin objective function (10)and get the following objective function:

Accordingly,we can reformulate problemPas the following equivalent optimization problem.

It is valuable to note that the conversion from problemPto problemdoes not change the optimality of the solution of problemP.

3.2 Optimal Summation of Task Caching and Computation Offloading

In this subsection,we determine the optimal sum of task caching decision and computation offloading strategy for each user.To proceed in the analysis of task offloading and caching design,let us define a variabletk=xk+αk(?k ∈K).Consequently,the objective function(16)can be converted as

wherettt=[t1,t2,···,tK].Up to now,we can use alternative optimization method to obtain the optimaltttandα.Then,we usexk=tk ?αkto find the optimal task caching decisionX.For problemwe first solve the optimal solution ofttt,and then substituting the optimaltttinto problemfor constructing the computation offloading problem and solving the optimalα.Removing the constant items that are irrelevant totttin(18),we can formulate the problem of optimizingtttas follow.

where the objective function ofP2is

One should note that in problemP2,we omit the constraint (22a).This is because that the computation offloading decisionαkalways can ensure thattkranges from 0 toMk,the constraint(22a)does not narrow the value range oftk.

Since problemP2is a discrete and non-linear optimization problem which is difficult to find the closedform solution.Below we transferP2into an equivalent linear programming problem based on some analysis and develop an algorithm which can find the optimal task summation of caching and computation offloading.

Removing the integer constraint of problemP2,the objective function is a continuous derivable function ofttt.Denote this continuous function as(0≤tk ≤Mk,tk ∈R,?k ∈K).We can get the following result.

Lemma 1.The continuous functionis a concave function.

Proof.See Appendix B.

According to Lemma 1,the minimal value ofis at the boundary point.That is to say,the optimalψ(ttt) is at the boundary point.Hence,tkis either 0 orMk.Up to now,we can use an exhausted search method to find the optimalttt.However,the time complexity is 2K,which is impractical in practical applications.To derive the optimal solution of problemP2in polynomial time,we introduce a binary vectorθ=[θ1,θ2,...,θK],whereθkis given by

Consequently,the objective function(24)can be written as

Thus,we can reformulate problemP2as the following equivalent problem.

It is obvious thatθkθh=min(θk,θh) anddue toθk,θh ∈{0,1}(?k,h ∈K).For ease of analysis,we introduce a variable,i.e.,zzz={zk,h: 1≤k Sequentially,we can reformulate problemas the following equivalent linear integer programming. Particularly,the integer constraints lead to high complexity for solving problemNoting that each row in the coefficient matrix corresponding to Constraint(29a)and Constraint(29b)contains a‘-1’and a‘1’,it is a unimodular matrix.In accordance with Theorems in[35],the solution of the linear programming relaxation of problemis integer solution.Consequentially,we can transfer problemas the follow-ing equivalent linear programming problem. Up to now,we can obtain the optimal solution of linear problemby Karmarkar’s algorithm [36] in polynomial time.Consequently,the optimal solution of problemP2is found in polynomial time.For clarity,we summarize the detailed steps of task offloading algorithm as Algorithm 1.In particular,according to[36],the complexity of finding the optimal solution isonO(L)digit numbers. Algorithm 1.Task sum of caching and computation offloading search algorithm(vT,vE,fk,fC,ζ,Sk,K). In this subsection,we find the optimal computation offloading schemeα?.Denote the optimal solution obtained from Algorithm 1 byttt?=Pluggingttt?in the objective function(18),we can get the following function expression with an only argumentα: Up to now,problemPhas been converted into a task offloading problem.Note that the last three terms in function(31)are constant in terms ofα.For ease of analysis,we omit the last three terms in the objective function of task offloading problem and reformulate the task offloading problem as follows. where it has It is straightforward to see that the objective function (33) is a monotonically increasing function with respect toαk.Besides,the objective of problemP3is to minimize Λ(α).Thus,we have the following result on the optimalα. Based on the above analyses,we can derive the optimal solution of problemP3.Since the objective function is a monotonically increasing function,one should let the users who possess small coefficient offload their tasks as much as possible.Firstly,we sort the users based on their coefficientThen,we set the users’ offloading decisionαktoMkfrom the first user until the summation of users’offloading variable exceedsFor clarity,the detailed steps of computation offloading algorithm are summarized as Algorithm 2.With some calculations,one can see that the computational complexity of finding the optimal computation offloading decisionαisO(K2). Remark 1.Through the above analysis,we can get the optimal ttt and α by using Algorithm 1 and Algorithm 2,respectively.In this paper,the BS will responsible for solving the optimization problems of resource allocation,task caching and computation offloading.To get the optimal resource allocation decisions,task caching and computation offloading strate-gies,the BS needs to collect information from users,including tasks size,computing capabilities,delay,energy consumption preference parameters,and transmission power.Firstly,the BS construct the optimization problem of ttt (i.e.,problem),and find the optimal ttt based on Algorithm 1.Then,the BS construct the optimization problem of α(i.e.,problem P3),and find the optimal α based on Algorithm 2.Next,the BS compute the optimal X based on tk=xk+αk,?k ∈K.Finally,the BS substitute the optimal task caching decision X and the optimal computation offloading strategy α into theorem 1 to get the optimal computing resource allocation scheme.Based on the above analysis,the time complexity of finding the global optimal solution of problem P is O Algorithm 2.Computation offloading algorithm(vT,vE,μ,K). In this section,we evaluate the proposed scheme by comparing its performances with that of other three benchmark schemes.The first benchmark scheme is a joint task caching and local computing scheme where corresponding computation offloading is not used.The second scheme is a local computing scheme,in which all users execute computation tasks on their own devices locally.The last scheme is joint computation offloading and local computing scheme where task caching is not adopted.We refer to these three schemes as Local+Caching,Local Computing and MEC Offloading,respectively. In the simulations,we consider a single cell thatKusers are randomly distributed over a 200m×200m region and the base station is located at the center of the region.The uplink transmission bandwidth is set toB=3MHz and the transmission power of all users are set toPk=0.5W.According to the realistic measurements in [31],we set the energy coefficientζas 5×10?27.The white Gaussian noise variance is assumed to beσ2=2×10?13.In addition,the channel gain is modeled asHk=127+30×logdk,wheredkis the distance between userkand the BS [12].The number of subtasks for each task,i.e.,Mk,is uniform randomly selected in [1,Mmax].In terms of computing resources,we assume that the CPU capability of the MEC server and each user are 50 GHz and 1 GHz,respectively.The required CPU cycles for computing taskk,i.e.,Sk,is randomly selected in[1,Smax]Gigacycles. figure 2 shows the system cost of the proposed and three benchmark schemes for the different number of users.It is clearly seen that along with the increase of user number,the system cost of the four schemes keeps increasing.Besides,when the device number is small (less than 25),the system cost of the proposed scheme is almost the same as Local+Caching scheme.This is because the total data size of all tasks is small,and the MEC server can store all these tasks.Along with the increases of devices number,the MEC server cannot store all tasks,the system cost of Local+Caching is greater than the proposed scheme.When the devices number increase to exceed a certain number(almost 30),the system cost of Local+Caching scheme exceeds MEC offloading.The reason is that the storage memory of the MEC server is filled,the Local+Caching scheme must execute tasks that not existed in the MEC server by local computing.In contrast,the MEC Offloading scheme still can execute tasks by using MEC server whose cost is lower than local computing.In general,the system cost of our proposed scheme is significantly suppressed compared with the other three benchmark schemes.This comes from the zero cost brought by proactive task caching and low cost brought by computation offloading. Figure 2.Comparison of system cost against different number of users.C=2Gigabytes,μ=50 Megabytes,Mmax=5,Smax=5,vT=0.5. Figure 3.Average energy consumption with different number of mobile users.C=2Gigabytes,μ=50 Megabytes,Mmax=5,Smax=5,vT=0. Figure 4.Average task execution delay with different number of mobile users.C=2Gigabytes,μ=50 Megabytes,Mmax=5,Smax=5,vT=1. The effect of the number of users on the average energy consumption and the average task execution delay is illustrated in figure 3 and figure 4,respectively.In figure 3,we set the user preference parameter of delayvTto zero,which represents the objective of the original problem is to minimize the average energy consumption.It is observed in figure 3 that the proposed scheme,the MEC offloading scheme,and the caching scheme can effectively reduce the energy consumption of the system compared with the local computing scheme.Besides,it can be seen that the average energy consumption of the proposed scheme is significantly lower than that of the other three schemes.In comparison,the user preference parameter of delayvTis set to one in figure 4,which represents the objective of the original problem is to minimize the average task execution delay.It can be seen from figure 4 that the average task execution delay of the proposed scheme and the Local+Caching scheme are smaller than that of the local computing scheme and the MEC offloading scheme.Besides,when the device number is small,the average task execution delay of the proposed scheme is the same as that of Local+Caching.This is because the MEC server can store all tasks of users.It is straightforward to see that the proposed scheme outperforms Local+Caching scheme when the device number increases to over 20,this performance gap is brought by computation offloading.Overall,we can observe that the proposed scheme can significantly reduce the average task execution delay from figure 4.figure 5 and figure 6 present how the system cost of different schemes vary with the task profiles,i.e.,subtask input size and maximum task load,respectively.It can be seen that the system cost of all schemes increases with both the task input size and maximum task workload.This implies that the tasks with small input sizes and low workloads benefit more from caching and offloading than those with large input sizes and high workloads do.Moreover,it is observed that the proposed scheme can effectively reduce the system cost compared with the other three schemes.This benefit is brought from the rational use of storage and computing resources. Figure 5.Comparison of system cost against different value of task workload.C=2Gigabytes,μ=50 Megabytes,K=10,Mmax=5,vT=0.2. Figure 6.Comparison of system cost against different value of subtask input size.C=2Gigabytes,μ=50 Megabytes,K=10,Smax=5,vT=0.2. Figure 7.Comparison of system cost against different user transmission power.C=0.5Gigabytes,μ=50 Megabytes,K=10,Mmax=5,Smax=5,vT=0.2. The effect of the user transmission power is presented in figure 7.It is observed that the system cost increases with power.This because the large transmission power conducts high energy consumption when users offload task to the MEC server.Although the transmission rate can be improved with large power,the system cost produced by the corresponding transmission energy consumption is still much larger than the system gain brought by the delay reduction.Besides,it is clearly seen that the proposed scheme outperforms the other three scheme. The relationship between the system cost and the capacity of the MEC server is illustrated in figure 8.It can be seen that the system cost of the proposed scheme and the caching scheme are decreasing along with the increase of capacity value.This is because along with the increase ofC,users get more lowcost resources to handle their tasks.WhenCis large enough,the MEC server can store all contents,and all tasks can be executed at the lowest cost.The cost curve of the proposed scheme coincides with the curve of the caching scheme.Similar to other simulation results,the performance of the proposed scheme is better than the other benchmark schemes.The reason behind is that the proposed scheme considers storage and computing resource comprehensively.figure 9 and figure 10 illustrate how the average energy consumption and delay change with the user preference to delayvT,which is varying from zero to one and always satisfyvT+vE=1(?k ∈K).It is observed that the average energy consumption increases and the average time delay decreases along with the increases ofvT. Figure 8.Comparison of system cost against different storage capacity of the MEC server.μ=50 Megabytes,K=10,Mmax=5,Smax=5,vT=0.2. Figure 9.Comparison of task execution delay against different user preference parameter to delay.C=1 Gigabytes,μ=50 Megabytes,Mmax=5,Smax=5. Figure 10.Comparison of average energy consumption against different user preference parameter to delay.C=1 Gigabytes,μ=50 Megabytes,Mmax=5,Smax=5. In addition,the difference among the average time delays under a different number of users is shrinking along with the increase ofvT.Combining the above analysis results,the emphasis on higher latency results in that the system is more inclined to choose high energy and low latency local computing.Moreover,the users experience a larger average delay and energy consumption in crowd system.This is because when there are more users competing for the limited resources,the opportunity that a user can benefit from caching or offloading its task is lower.It is worth to n note that there is a sudden change in average delay when user preference from 0 to 0.1.This is because the objective of the original problem is to minimize energy consumption whenvT=0 and do not consider to optimize delay.WhenvTchange to 0.1,the objective is the weighted sum of delay and energy consumption,it optimizes the computing resource allocation decision to reduce delay.According to the presented simulation results,the proposed scheme outperforms the other benchmark schemes and can significantly reduce the system cost.The majority of the performance improvement comes from the full use of the storage and computing resources of the MEC server.It is valuable to note that task caching only requires a low price for the user.In the other benchmark schemes,devices do not consider jointly taking advantage of the storage and computing resources.In particular,whenCis large,the performance of the proposed algorithms become extremely well. We have investigated the joint computing resource allocation,task caching and computation offloading problem in MEC-enabled wireless cellular networks.The optimization problem is formulated as a system cost minimization problem which aims at minimizing the weighted-sum of task execution delay and energy consumption for all users.We use task caching to provide users with a very low-cost computing solution.Besides,users also can offload tasks to the MEC server to reduce system cost.Since the problem is a mixedinteger non-linear program which is difficult to solve,we decompose the original problem into three subproblems through analyzing the characters of the problem and the structure of the optimal solution,i.e.,computing resource allocation problem,task caching and computation offloading problem,where the computing resource allocation problem is a convex optimization problem,we solve it by using KKT conditions.Besides,we proposed an effective algorithm to find the summation of task caching decision and computation offloading strategy by transferring the problem to a linear programming problem which can be solved by Karmarkar’s algorithm in polynomial time.Finally,we addressed the computation offloading problem by a low-complexity algorithm and establish the optimal task caching strategy based on the obtained optimal summation of task caching and computation offloading decision.It is shown by extensive simulations that the proposed scheme can significantly decrease the system cost since it harnesses the advantage of task caching and computation offloading in saving energy and reducing delay. ACKNOWLEDGEMENT This work was supported in part by the National Natural Science Foundation of China under Grant 61971077,Grant 61901066,in part by the Project Supported by Chongqing Key Laboratory of Mobile Communications Technology under Grant cquptmct-201902,in part by the Chongqing Science and Technology Commission under Grant cstc2019jcyjmsxmX0575,in part by the Program for Innovation Team Building at colleges and universities in Chongqing,China under Grant CXTDX201601006. APPENDIX A.Proof of Theorem 1In problemP1,allβk(k ∈K) are continuous real number variables.Hence,the objective function is a second-order derivable function.In particular,the second-order derivatives of the objective function(13)are given by According to the definitions of variables,it is straightforward to see that≥0,(?k,h ∈K).This implies that the Hessian matrix ofg(β) is a diagonal matrix and the elements on the diagonal are all non-negative.Consequently,the Hessian matrix is a semi-positive matrix andg(β) is a convex function.Besides,the constraints of problemP1are all linear.Thus,problemP1is a convex optimization problem.Accordingly,the optimal resource allocation strategy can be solved by using Karush-Kuhn-Tucker (KKT)conditions[37].We can conclude that wherehj(β)represents thej-th constraint.There are total 2K+1 constraints.By solving(36),we can obtain the optimal resource allocation strategy as Theorem 1. B.Proof of Lemma 1 First,let us define a function whereη=(η1,η2,···,ηK)(ηk ≥0,?k ∈K),K={1,2,···K}.The first-order derivatives ofL(η) are given by It is straightforward to see that the RHS of (39) is great than zero,since the inequality always holds.Hence,L(η) is a concave function.Note thatN(tk)=is a linear function.Based on vector composition principle [38],L(N(t1),N(t2),···,N(tK)) is a concave function.Besides,a nonnegative weighted sum of concave functions is concave.Therefore, is a concave function.

3.3 Optimal Computation Offloading

IV.SIMULATION RESULTS

4.1 Simulation Setup

4.2 Simulation Analysis

V.CONCLUSION

- China Communications的其它文章

- Energy-Efficient Full-Duplex UAV Relaying with Trajectory Optimization and Power Control in Maritime Communication Environments

- LDM-Satellite: A New Scheme for Packet Loss Classification over LEO Satellite Network

- Selective PNC in Satellite-Terrestrial Network over Non-Identical Fading Channels

- PS-G-GERT Effectiveness Evaluation Model of Link-Based GEO Satellite Communication Constellation under Poor Information Background

- A Resource-Based Pricing Collaborative Approach for Mitigating DDoS Attack in Mobile Edge Computing

- Unlicensed Band Sharing Based on Bargaining Game for Heterogeneous Networks