Construction of a convolutional neural network classifier developed by computed tomography images for pancreatic cancer diagnosis

Han Ma, Zhong-Xin Liu, Jing-Jing Zhang, Feng-Tian Wu, Cheng-Fu Xu, Zhe Shen, Chao-Hui Yu, You-Ming Li

Abstract

Key Words: Deep learning; Convolutional neural networks; Pancreatic cancer; Computed tomography

INTRODUCTION

Pancreatic ductal adenocarcinoma (PDAC), more commonly called “pancreatic cancer”, is the most common solid malignancy of the pancreas and is aggressive and challenging to treat[1]. Pancreatic cancer is a highly lethal malignancy with a very poor prognosis[2]. Despite recent advances in surgical techniques, chemotherapy, and radiation therapy, the 5-year survival rate remains a dismal 8.7%[3]. Most patients with pancreatic cancer have nonspecific symptoms, so the disease is often found at an advanced stage. Only 10%-20% of patients present with localized disease, the stage at which complete surgical resection and chemotherapy offer the best chance of survival, with a 5-year survival rate of approximately 31.5%. The remaining 80%-90% of patients miss the chance to benefit from surgery because of distant or local metastases at the time of diagnosis[4,5].

Currently, effective early diagnosis remains difficult and depends mainly on imaging modalities[6]. Compared with ultrasonography, magnetic resonance imaging (MRI), endoscopic ultrasonography, and positron emission tomography, computed tomography (CT) is the most commonly used imaging modality for the initial evaluation of suspected pancreatic cancer[7,8]. CT scans are also used to screen asymptomatic patients at high risk of developing pancreatic cancer. Patients whose pancreatic cancer is diagnosed incidentally during an imaging examination for an unrelated disease have a longer median survival time than those who are already symptomatic[9]. The sensitivity of CT for detecting pancreatic adenocarcinoma ranges from 70% to 90%[10]. The imaging modality of choice for diagnosing pancreatic cancer is thin-section, contrast-enhanced, dual-phase multidetector CT[11].

Recently, owing to promising achievements in deep neural networks and increasing medical needs, computer-aided diagnosis (CAD) systems have become a new research focus. There have been some initial successes in applying deep learning to assess radiological images; for example, deep learning-aided decision-making has been used to support the diagnosis of pulmonary nodules and skin tumors[12,13]. Given the high morbidity of pancreatic cancer, CAD systems that can distinguish pancreatic cancer from benign tissue need to be developed. A convolutional neural network (CNN) is a class of neural network models that extract features from images by exploiting the local spatial correlations present in them. CNN models have been shown to be effective and powerful for a variety of image classification problems[14].

In this study, we demonstrated that a deep learning method can classify pathologically confirmed pancreatic ductal adenocarcinoma using clinical CT images.

MATERIALS AND METHODS

Data collection and preparation

Dataset: Patients with pathologically diagnosed pancreatic cancer at the First Affiliated Hospital, Zhejiang University School of Medicine, China, between June 2017 and June 2018 were eligible for inclusion in the present study. Patients with a CT-confirmed normal pancreas were also randomly collected during the same period. All data were retrospectively obtained from patients’ medical records. Images of pancreatic cancers and normal pancreases were extracted from the database. All cancer diagnoses were based on pathological examination, either by pancreatic biopsy or by surgery (Figure 1). Participants gave informed consent to allow the data collected from them to be published. Because of the retrospective study design, we informed all included participants verbally, and patients who did not want their information to be shared could opt out. Subject information was anonymized at the collection and analysis stages. All methods were performed in accordance with the approved guidelines, and the Hospital Ethics Committee approved the study protocol. A total of 343 patients were pathologically diagnosed with pancreatic cancer from June 2017 to June 2018; of these, 222 underwent abdominal contrast-enhanced CT in our hospital before surgery or biopsy. We also randomly collected 190 patients with a normal pancreas who underwent contrast-enhanced CT. Thus, of the 412 enrolled subjects, 222 had pathologically diagnosed pancreatic cancer, and the remaining 190, with a normal pancreas, were included as the control group.

Imaging techniques: Multiphasic CT was performed following a pancreas protocol using a 256-channel multidetector-row CT scanner (Siemens). The scanning protocol included unenhanced and contrast material–enhanced biphasic imaging in the arterial and venous phases after intravenous administration of 100 mL of ioversol at a rate of 3 mL/s using an automated power injector. Images were reconstructed at a 5.0-mm slice thickness. For each CT scan, one to nine images of the pancreas were selected from each phase. In total, 3494 CT images from 222 patients with pathologically confirmed pancreatic cancer and 3751 CT images from 190 patients with a normal pancreas were collected.

Deep learning technique

Figure 1 Examples from the dataset.

Data preprocessing: We adopted a CNN model to classify the CT images. Because a CNN requires all input images to be the same size, we first center-cropped each CT image to a fixed 512 × 512 resolution. Each image was stored in the RGB color model, in which red, green, and blue light are added together to reproduce a broad range of colors, and thus consisted of three color channels (red, green, and blue). We normalized each channel of every image using 0.5 as both the mean and the standard deviation. This normalization was performed because all images were processed by the same CNN, and the results might improve if the feature values of the images were scaled to a similar range.
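The cropping and normalization steps can be sketched in NumPy as follows. This is a minimal illustration, not the authors' code: it assumes each image arrives as an 8-bit (H, W, 3) array at least 512 pixels on each side, and that pixel values are first scaled to [0, 1] before the 0.5 mean/standard-deviation normalization (a common deep learning convention; the text does not state the scaling). The function names are hypothetical.

```python
import numpy as np


def center_crop(img: np.ndarray, size: int = 512) -> np.ndarray:
    """Crop an (H, W, C) array to size x size around its center."""
    h, w = img.shape[:2]
    if h < size or w < size:
        raise ValueError(f"image {h}x{w} is smaller than {size}x{size}")
    top = (h - size) // 2
    left = (w - size) // 2
    return img[top:top + size, left:left + size]


def normalize(img: np.ndarray, mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5)) -> np.ndarray:
    """Scale 8-bit values to [0, 1] (assumed), then apply (x - mean) / std per channel."""
    x = img.astype(np.float32) / 255.0
    mean = np.asarray(mean, dtype=np.float32)  # broadcasts over the last (channel) axis
    std = np.asarray(std, dtype=np.float32)
    return (x - mean) / std


# Example on a synthetic 600 x 700 RGB image
image = np.random.default_rng(0).integers(0, 256, size=(600, 700, 3), dtype=np.uint8)
x = normalize(center_crop(image))
print(x.shape, float(x.min()) >= -1.0, float(x.max()) <= 1.0)
```

With both the mean and the standard deviation set to 0.5, values in [0, 1] are mapped to [-1, 1], so every channel of every image shares the same range.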

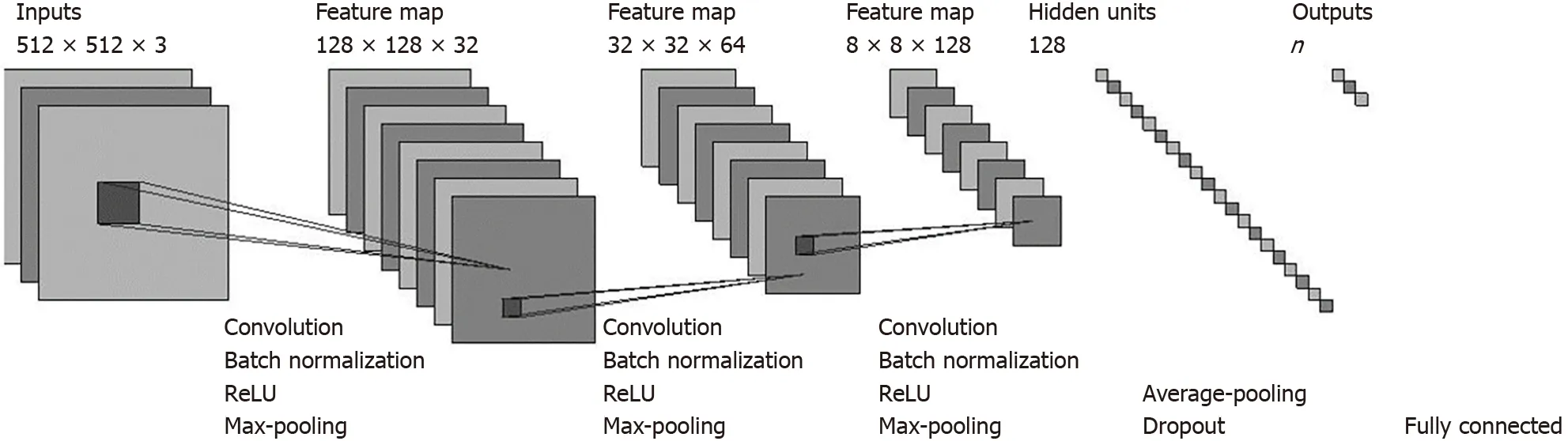

CNN: In this work, we designed a CNN model to classify pancreatic CT images to assist in pancreatic cancer diagnosis. The architecture of the proposed model is presented in Figure 2. The model consisted primarily of three convolutional layers and a fully connected layer. Each convolutional layer was followed by a batch normalization (BN) layer that normalized the outputs of the convolutional layer, a rectified linear unit (ReLU) layer that applied an activation function to its input values, and a max-pooling layer that performed down-sampling. We also placed an average-pooling layer before the fully connected layer to reduce the dimensionality of the features fed into the fully connected layer. Following Srivastava et al[15], we applied dropout with a rate of 0.5 between the average-pooling layer and the fully connected layer to reduce overfitting and improve performance. We also tried Spatial Dropout[16] between each max-pooling layer and the following convolutional layer, but found that it degraded performance; therefore, we did not apply Spatial Dropout. The network takes the pixel values of a CT image as input and outputs the probability that the image belongs to each class (e.g., the probability that the corresponding patient has pancreatic cancer). The CT images were passed through the model layer by layer: the input to each layer is the output of the previous layer, and each layer applies a specific transformation to its input and passes the result to the next layer.
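To make the layer sequence concrete, the NumPy sketch below runs an inference-time forward pass through three blocks of convolution, BN, ReLU, and max-pooling, followed by average pooling and a fully connected layer with softmax. The actual kernel sizes, channel counts, and pooling settings are given in the Supplementary Material and are not reproduced here; the 3 × 3 kernels, the 16/32/64 channel widths, 2 × 2 pooling, global average pooling, and random weights are all hypothetical. Dropout is omitted because it is inactive at inference.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv2d(x, w, b):
    """Stride-1, zero-padded 'same' convolution (cross-correlation, as in DL frameworks).
    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k; b: (C_out,)."""
    p = w.shape[-1] // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    win = sliding_window_view(xp, w.shape[-2:], axis=(1, 2))  # (C_in, H, W, k, k)
    return np.einsum("chwij,ocij->ohw", win, w) + b[:, None, None]


def batch_norm(x, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode BN using stored per-channel statistics."""
    c = (slice(None), None, None)
    return gamma[c] * (x - mean[c]) / np.sqrt(var[c] + eps) + beta[c]


def max_pool2(x):
    """2 x 2 max pooling with stride 2 (an odd trailing row/column is dropped)."""
    c, h, w = x.shape
    x = x[:, : h // 2 * 2, : w // 2 * 2]
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def init_params(n_classes, channels=(3, 16, 32, 64), k=3, seed=0):
    """Hypothetical random weights; BN statistics start at mean 0, variance 1."""
    rng = np.random.default_rng(seed)
    blocks = []
    for c_in, c_out in zip(channels[:-1], channels[1:]):
        blocks.append({
            "w": rng.normal(0.0, np.sqrt(2.0 / (c_in * k * k)), (c_out, c_in, k, k)),
            "b": np.zeros(c_out),
            "gamma": np.ones(c_out), "beta": np.zeros(c_out),
            "mean": np.zeros(c_out), "var": np.ones(c_out),
        })
    fc_w = rng.normal(0.0, 0.01, (n_classes, channels[-1]))
    return blocks, fc_w, np.zeros(n_classes)


def forward(x, blocks, fc_w, fc_b):
    """x: (3, H, W) normalized image -> class probabilities of shape (n_classes,)."""
    for blk in blocks:
        x = conv2d(x, blk["w"], blk["b"])
        x = batch_norm(x, blk["gamma"], blk["beta"], blk["mean"], blk["var"])
        x = np.maximum(x, 0.0)  # ReLU
        x = max_pool2(x)
    feat = x.mean(axis=(1, 2))  # global average pooling -> (C,)
    # Dropout (p = 0.5) would act on `feat` during training only.
    logits = fc_w @ feat + fc_b
    e = np.exp(logits - logits.max())  # numerically stable softmax
    return e / e.sum()


image = np.random.default_rng(1).normal(size=(3, 64, 64))
probs = forward(image, *init_params(n_classes=2))
print(probs, probs.sum())
```

Passing n_classes=3 instead of 2 changes only the shape of the fully connected weights, which mirrors how the same architecture serves both the binary and the ternary tasks.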

Figure 2 Architecture of our convolutional neural network model.

The convolutional and pooling layers require several hyperparameters, whose settings are given in the Supplementary Material. The Supplementary Material also discusses these layers in sequence (first the convolutional layer, then the BN layer, the ReLU layer, the max-pooling and average-pooling layers, and finally the fully connected layer) and then presents the hyperparameter settings of our model.

Training and testing the CNN: We collected three types of CT images (plain scan, venous phase, and arterial phase) and built three datasets based on the image type. Each dataset may include several images from one patient. To divide a dataset into training, validation, and test sets, we first collected the identification numbers (IDs) of all patients in the dataset. Each patient was given one of three labels: “no cancer (NC)”, “with cancer at the tail and/or body of the pancreas (TC)”, or “with cancer at the head and/or neck of the pancreas (HC)”. For each label, e.g., “no cancer”, we randomly placed 10% of the patients with this label into the validation set, 10% into the test set, and the remaining 80% into the training set. Notably, images of the same patient appear in only one set.
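The patient-level, per-label split described above can be sketched with the Python standard library. This is an illustrative sketch under stated assumptions: the helper names, the rounding of the 10% shares, and the synthetic patient IDs are hypothetical; only the 80/10/10 proportions, the three labels, and the rule that all images of a patient stay in one set come from the text.

```python
import random
from collections import defaultdict


def split_patients(patient_labels, frac_val=0.1, frac_test=0.1, seed=0):
    """Per-label random split of patient IDs into train/val/test.
    patient_labels: dict mapping patient ID -> label ("NC", "TC", or "HC")."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for pid, label in patient_labels.items():
        by_label[label].append(pid)
    splits = {"train": [], "val": [], "test": []}
    for label in sorted(by_label):
        pids = sorted(by_label[label])
        rng.shuffle(pids)
        n_val = round(len(pids) * frac_val)   # assumed rounding rule
        n_test = round(len(pids) * frac_test)
        splits["val"] += pids[:n_val]
        splits["test"] += pids[n_val:n_val + n_test]
        splits["train"] += pids[n_val + n_test:]
    return splits


def assign_images(images, splits):
    """images: list of (patient_id, image_name); each image follows its patient."""
    set_of = {pid: name for name, pids in splits.items() for pid in pids}
    out = {name: [] for name in splits}
    for pid, img in images:
        out[set_of[pid]].append(img)
    return out


# Hypothetical IDs using the cohort sizes: 190 NC, 93 TC, 129 HC patients
labels = {f"P{i:03d}": ("NC" if i < 190 else "TC" if i < 283 else "HC") for i in range(412)}
splits = split_patients(labels)
print({k: len(v) for k, v in splits.items()})  # {'train': 330, 'val': 41, 'test': 41}
```

Splitting by patient ID before assigning images is what prevents near-duplicate slices from the same patient from leaking between the training and test sets.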

All patients and their CT images were marked with one of the three labels, i.e., NC, TC, or HC. For each dataset, we could treat the TC and HC patients together as “with cancer (CA)” and train a binary classifier to classify all the CT images. We also trained a ternary classifier to determine the specific cancer location. Our approach is flexible enough to be used as either a binary or a multiclass classifier; only the output dimension of the fully connected layer (i.e., the number of classes) needs to be specified to control the classifier type.
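A small sketch of how the three patient labels could be encoded for each task follows. The dictionary and function names are hypothetical; the only facts taken from the text are that TC and HC are merged into “with cancer (CA)” for the binary task and kept separate for the ternary task.

```python
# Hypothetical label encodings; only the number of classes changes between tasks.
LABEL_SCHEMES = {
    "binary": {"NC": 0, "TC": 1, "HC": 1},   # TC and HC merged into "with cancer (CA)"
    "ternary": {"NC": 0, "TC": 1, "HC": 2},
}


def encode(labels, scheme):
    """Map patient/image labels to integer class indices for the chosen task."""
    mapping = LABEL_SCHEMES[scheme]
    return [mapping[label] for label in labels]


def num_classes(scheme):
    """Output dimension of the fully connected layer for the chosen task."""
    return len(set(LABEL_SCHEMES[scheme].values()))


print(encode(["NC", "TC", "HC"], "binary"), num_classes("binary"))    # [0, 1, 1] 2
print(encode(["NC", "TC", "HC"], "ternary"), num_classes("ternary"))  # [0, 1, 2] 3
```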

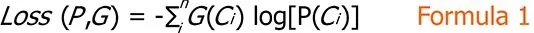

Given a dataset and the number of target classes (denoted as n), we trained our model on the training set with a mini-batch size of 32. In each training iteration, we used the cross-entropy loss function to calculate the loss between the predicted results of our model (i.e., the probability distribution P output by the fully connected layer) and the ground truth (denoted as G), computed as Formula 1:

$$L = -\sum_{i=1}^{n} G_i \log P_i \qquad (1)$$

where P_i is the predicted probability of class i and G_i is 1 for the true class of the image and 0 otherwise.

This loss was used to guide the updates of the weights in our CNN model; we used Adam as the optimizer. The statistics of each dataset are presented in Table 1.
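The sketch below illustrates how the cross-entropy loss of Formula 1 can drive weight updates through Adam. It is not the authors' training code. Assumptions: the loss is averaged over the mini-batch; Adam uses the default hyperparameters from Kingma and Ba (β1 = 0.9, β2 = 0.999, ε = 1e-8) because the text does not state them; and a linear softmax layer on synthetic features (hypothetical `train_toy`) stands in for the full CNN.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def cross_entropy(P, G, eps=1e-12):
    """Formula 1 averaged over a mini-batch: mean of -sum_i G_i * log(P_i)."""
    return float(-np.mean(np.sum(G * np.log(P + eps), axis=1)))


class Adam:
    """Adam with its commonly used default hyperparameters (assumed here)."""

    def __init__(self, params, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
        self.params, self.lr, self.b1, self.b2, self.eps = params, lr, beta1, beta2, eps
        self.m = [np.zeros_like(p) for p in params]
        self.v = [np.zeros_like(p) for p in params]
        self.t = 0

    def step(self, grads):
        self.t += 1
        for p, g, m, v in zip(self.params, grads, self.m, self.v):
            m[...] = self.b1 * m + (1 - self.b1) * g        # first-moment estimate
            v[...] = self.b2 * v + (1 - self.b2) * g * g    # second-moment estimate
            m_hat = m / (1 - self.b1 ** self.t)             # bias corrections
            v_hat = v / (1 - self.b2 ** self.t)
            p -= self.lr * m_hat / (np.sqrt(v_hat) + self.eps)  # in-place weight update


def train_toy(steps=200, batch_size=32, n_features=4, lr=0.05, seed=0):
    """Hypothetical stand-in for the CNN: a linear softmax layer on synthetic features."""
    rng = np.random.default_rng(seed)
    half = batch_size // 2
    X = np.vstack([rng.normal(-1.0, 1.0, (half, n_features)),
                   rng.normal(1.0, 1.0, (half, n_features))])
    G = np.eye(2)[np.repeat([0, 1], half)]          # one-hot ground truth
    W, b = np.zeros((n_features, 2)), np.zeros(2)
    opt = Adam([W, b], lr=lr)
    losses = []
    for _ in range(steps):
        P = softmax(X @ W + b)
        losses.append(cross_entropy(P, G))
        dZ = (P - G) / len(X)                       # gradient of the mean loss w.r.t. logits
        opt.step([X.T @ dZ, dZ.sum(axis=0)])
    return losses


losses = train_toy()
print(f"first loss {losses[0]:.4f}, last loss {losses[-1]:.4f}")
```

With all weights initialized to zero, both classes start at probability 0.5, so the first loss equals ln 2; the gradient (P - G) of softmax plus cross-entropy is what makes this pairing convenient in practice.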

After each update, we calculated the accuracy (see the Evaluation section below) of the new model on the validation set to assess the quality of the current model. We trained our model for a maximum of 100 epochs, and the model with the highest validation accuracy was selected as the final model. A 10-fold cross-validation process was used to evaluate our techniques. We randomly divided the images in each phase into 10 folds; 8 folds were used for training, 1 fold served as the validation set, and the remaining fold was used to test the model. The entire process was repeated 10 times so that each fold was used as the test set once, and the average performance was recorded. We evaluated the effectiveness of our CNN model on the test sets in terms of accuracy, precision, and recall (see the Evaluation section below).
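The fold rotation and best-epoch selection can be sketched as follows. The text does not say which fold serves as the validation set in each round, so using fold (r + 1) mod 10 while fold r is the test set is an assumption, as are the helper names.

```python
import random


def make_folds(items, k=10, seed=0):
    """Randomly partition items into k folds of near-equal size."""
    items = list(items)
    random.Random(seed).shuffle(items)
    return [items[i::k] for i in range(k)]


def cv_rounds(folds):
    """Yield (train, val, test) per round: fold r is the test set and, as an
    assumed convention, fold (r + 1) % k is the validation set."""
    k = len(folds)
    for r in range(k):
        v = (r + 1) % k
        train = [x for i, fold in enumerate(folds) if i not in (r, v) for x in fold]
        yield train, folds[v], folds[r]


def select_best_epoch(val_accuracies):
    """Index of the epoch with the highest validation accuracy (earliest on ties)."""
    return max(range(len(val_accuracies)), key=val_accuracies.__getitem__)


# Example: indices standing in for the 2094 plain-scan images
folds = make_folds(range(2094))
for train, val, test in cv_rounds(folds):
    assert not set(train) & set(test) and not set(val) & set(test)
print([len(f) for f in folds])
```

Every image appears in exactly one test fold across the 10 rounds, so averaging the per-round test metrics uses each image once for evaluation.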

Table 1 Statistics of our datasets

Evaluation: We evaluated our approach on the three datasets for both binary and ternary classification and measured its effectiveness with widely adopted classification metrics: accuracy, precision, and recall. Accuracy is the proportion of images that are correctly classified (denoted as TP) among all images (denoted as All) over all classes. The precision for class C_i is the proportion of images correctly classified as class C_i (denoted as TP_i) among all images classified as class C_i (denoted as TP_i + FP_i). The recall for class C_i is the proportion of images correctly classified as class C_i (TP_i) among all images that actually belong to class C_i (denoted as All_i). These metrics are calculated as follows:

$$\text{Accuracy} = \frac{TP}{All}, \qquad \text{Precision}_i = \frac{TP_i}{TP_i + FP_i}, \qquad \text{Recall}_i = \frac{TP_i}{All_i}$$

We relied primarily on accuracy because it measures the overall quality of a classifier across all classes rather than for a specific class C_i. For cancer detection, sensitivity, specificity, and precision are defined as follows:

Sensitivity = Recall in cancer detection = (The correctly predicted malignant lesions)/(All the malignant lesions);

Specificity = Recall in detecting noncancer = (The correctly predicted nonmalignant cases)/(All non-malignant cases);

Precision in cancer detection = (The correctly predicted malignant lesions)/(All images classified as malignant).
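The definitions above can be computed from a confusion matrix as in this sketch. The function names and the toy predictions are hypothetical; class 0 is taken to mean “no cancer” and class 1 “cancer” in the binary case, so sensitivity is the recall of class 1 and specificity the recall of class 0.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """cm[t][p] counts images of true class t predicted as class p."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def classification_metrics(y_true, y_pred, n_classes):
    cm = confusion_matrix(y_true, y_pred, n_classes)
    total = sum(map(sum, cm))
    accuracy = sum(cm[i][i] for i in range(n_classes)) / total        # TP / All
    precision, recall = [], []
    for i in range(n_classes):
        predicted_i = sum(cm[t][i] for t in range(n_classes))         # TP_i + FP_i
        actual_i = sum(cm[i])                                         # All_i
        precision.append(cm[i][i] / predicted_i if predicted_i else 0.0)
        recall.append(cm[i][i] / actual_i if actual_i else 0.0)
    return accuracy, precision, recall


# Binary case: class 0 = no cancer, class 1 = cancer (hypothetical predictions)
y_true = [1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 1]
acc, prec, rec = classification_metrics(y_true, y_pred, 2)
sensitivity, specificity, cancer_precision = rec[1], rec[0], prec[1]
print(acc, sensitivity, specificity, cancer_precision)
```

The same function handles the ternary task by passing n_classes=3; the per-class recalls then give the sensitivity for tail/body and head/neck cancers separately.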

Comparison between deep learning and gastroenterologists

Ten board-certified gastroenterologists and 15 trainees participated in the study, and the accuracy of their image classifications was compared with that of the deep learning technique. Each gastroenterologist or trainee classified the same set of 100 plain-scan images randomly selected from the test dataset of the deep learning technique. The human response time was approximately 10 s per image. The classifications made by the board-certified gastroenterologists and trainees were compared with the results of the deep learning model.

Statistical analysis

We performed statistical analyses using SPSS 13.0 for Windows (SPSS, Chicago, IL, United States). Continuous variables are expressed as mean ± SD and were compared using Student’s t-test. The χ2 test was used to compare categorical variables. A P value < 0.05 (2-tailed test) was considered statistically significant.
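For readers who want to reproduce χ2 comparisons without SPSS, a standard-library sketch of Pearson's χ2 test of independence follows. It assumes no continuity correction and uses the closed-form upper-tail P values that exist for 1 and 2 degrees of freedom; the helper names and the example counts are hypothetical. A 2 × 2 table (e.g., CNN vs gastroenterologists, correct vs incorrect) has 1 degree of freedom, and a 3 × 2 table (three phases) has 2.

```python
import math


def chi_square_p(stat, df):
    """Upper-tail P value; closed forms exist for df = 1 and df = 2."""
    if df == 1:
        return math.erfc(math.sqrt(stat / 2.0))
    if df == 2:
        return math.exp(-stat / 2.0)
    raise NotImplementedError("use a statistics library for df > 2")


def chi_square_test(table):
    """Pearson chi-square test of independence (no continuity correction).
    table: rows are groups (e.g., phases), columns are outcomes (e.g., correct/incorrect)."""
    row_sums = [sum(row) for row in table]
    col_sums = [sum(col) for col in zip(*table)]
    n = sum(row_sums)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_sums[i] * col_sums[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df, chi_square_p(stat, df)


# Hypothetical counts: two readers' correct/incorrect calls on 100 images each
print(chi_square_test([[90, 10], [80, 20]]))
```

As a consistency check, chi_square_p(0.346, 2) gives about 0.841 and chi_square_p(0.759, 1) about 0.384, in line with the P values reported in the Results for the three-phase and two-group comparisons.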

RESULTS

Characteristics of the study participants

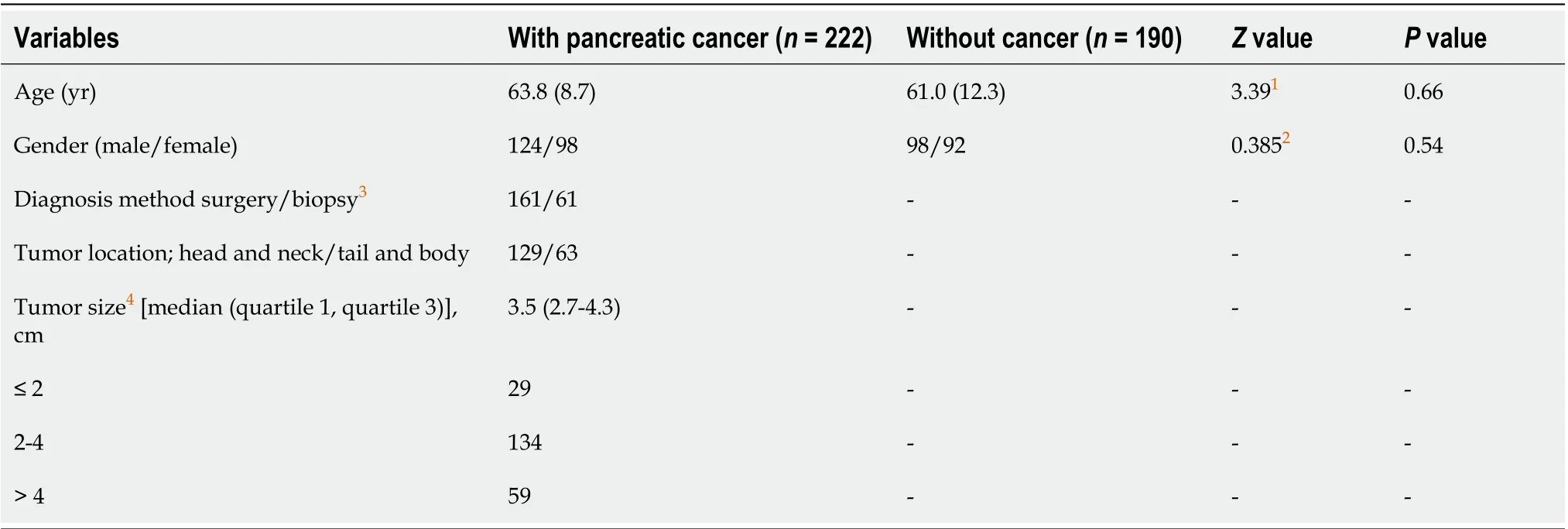

Among the 412 enrolled subjects, 222 were pathologically diagnosed with pancreatic cancer, and 190 with a normal pancreas were included as a control group. The characteristics of the enrolled participants, grouped by the presence or absence of pancreatic cancer, are shown in Table 2. The mean age was 63.8 ± 8.7 years for the cancer group (range, 39-86 years; 124 men/98 women) and 61.0 ± 12.3 years for the non-cancer group (range, 35-83 years; 98 men/92 women). The two groups did not differ significantly in age or sex (P > 0.05). In the cancer group, 129 tumors were located in the head and neck of the pancreas and 93 in the tail and body of the pancreas. The median tumor size in the cancer group was 3.5 cm (range, 2.7-4.3 cm).

Performance of the deep convolutional neural network used as a binary classifier

In total, 3494 CT images from 222 patients with pathologically confirmed pancreatic cancer and 3751 CT images from 190 patients with a normal pancreas were included; the statistics of each dataset are presented in Table 1. We labeled each CT image as “with cancer” or “no cancer” and then constructed a binary classifier using our CNN model, evaluated by 10-fold cross-validation on 2094, 2592, and 2559 images in the plain scan, arterial phase, and venous phase, respectively (Table 1).

The overall diagnostic accuracy of the CNN was 95.47%, 95.76%, and 95.15% on the plain scan, arterial phase, and venous phase, respectively. The sensitivity of the CNN (known as recall in cancer detection - the correctly predicted malignant lesions divided by all the malignant lesions) was 91.58%, 94.08%, and 92.28% on the plain scan, arterial phase, and venous phase images, respectively. The specificity of the CNN (known as recall in detecting non-cancer - the correctly predicted nonmalignant cases divided by all nonmalignant cases) was 98.27%, 97.57% and 97.87% on the three phases, respectively. The results are summarized in Table 3.

The differences in accuracy, specificity, and sensitivity among the three phases were not significant (χ2 = 0.346, P = 0.841; χ2 = 0.149, P = 0.928; χ2 = 0.914, P = 0.633, respectively). For this model, sensitivity is considerably more important than specificity and accuracy, because the purpose of the CT scan is cancer detection. Compared with the arterial and venous phases, the plain scan had comparable sensitivity, is easier to obtain, and involves lower radiation exposure. Thus, these results indicate that the plain scan alone might be sufficient for the binary classifier.

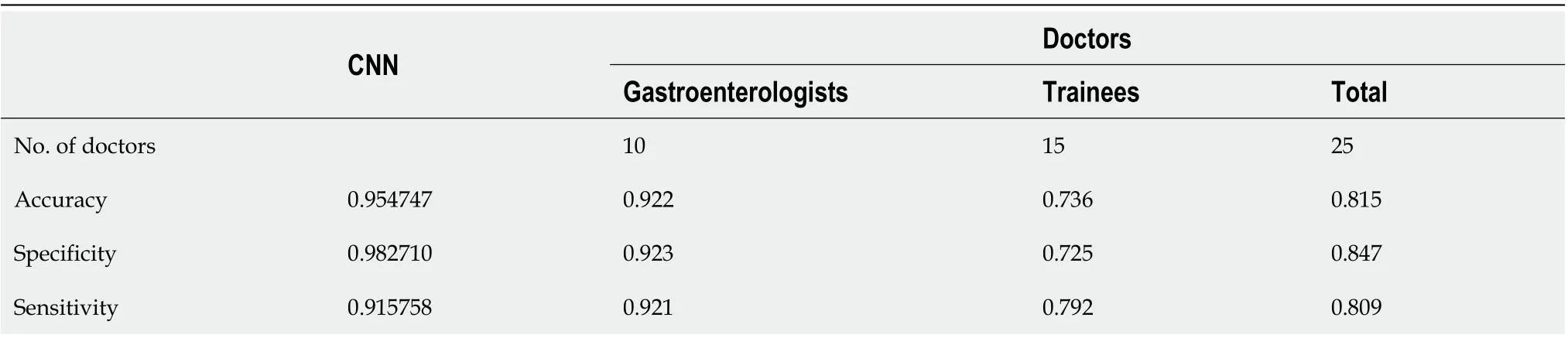

Comparison between CNN and gastroenterologists for the binary classification

Table 4 shows the results of the image evaluation of the test data by the ten board-certified gastroenterologists and 15 trainees. For all 25 readers combined, the accuracy, specificity, and sensitivity in the plain phase were 81.0%, 84.4%, and 80.4%, respectively. The gastroenterologists had significantly higher accuracy (92.2% vs 73.6%, P < 0.05), specificity (92.1% vs 79.2%, P < 0.05), and sensitivity (92.3% vs 72.5%, P < 0.001) than the trainees.
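Because every reader classified the same 100 images, the figures for all 25 readers combined should equal the reader-weighted mean of the two group values, assuming each group value is the mean over its readers. The small sketch below (hypothetical helper name) performs that arithmetic with the group values reported above; the pooled accuracy it yields, 81.0%, matches the combined value.

```python
def pooled(values, n_readers):
    """Reader-weighted mean of group-level percentages."""
    return sum(v * n for v, n in zip(values, n_readers)) / sum(n_readers)


groups = (10, 15)  # board-certified gastroenterologists, trainees
print(round(pooled([92.2, 73.6], groups), 1))  # accuracy: 81.0
print(round(pooled([92.3, 72.5], groups), 1))  # sensitivity: 80.4
print(round(pooled([92.1, 79.2], groups), 1))  # specificity: 84.4
```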

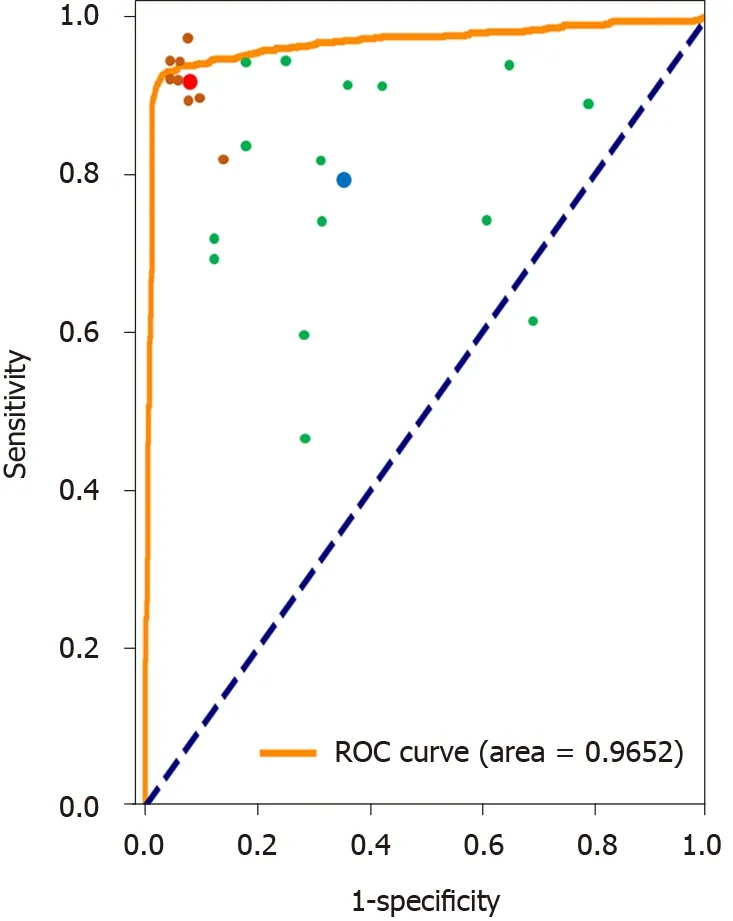

As described in the Methods section, ten board-certified gastroenterologists and 15 trainees participated in the study, and their image classification accuracy was compared with that of the deep learning technique used as a binary classifier. The accuracy of the gastroenterologists, trainees, and CNN was 92.20%, 73.60%, and 95.47%, respectively. Both the CNN and the board-certified gastroenterologists achieved higher accuracy than the trainees (χ2 = 21.534, P < 0.001; χ2 = 9.524, P < 0.05, respectively). However, the difference between the CNN and the gastroenterologists was not significant (χ2 = 0.759, P = 0.384). Figure 3 shows the receiver operating characteristic (ROC) curves for binary classification of the plain scan.
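An ROC curve such as the one in Figure 3 is obtained by sweeping a decision threshold over the classifier's predicted cancer probabilities, while each human reader contributes a single operating point. The sketch below shows one straightforward (quadratic-time) way to compute the curve and its area by the trapezoidal rule; the helper names and toy scores are hypothetical and are not the study's data.

```python
def roc_points(scores, labels):
    """(FPR, TPR) pairs from sweeping a threshold over every distinct score.
    labels: 1 = cancer, 0 = no cancer; scores: predicted cancer probabilities."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = [(0.0, 0.0)]
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= thr and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= thr and y == 0)
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


def reader_point(sensitivity, specificity):
    """A human reader yields one operating point (FPR, TPR) rather than a curve."""
    return (1.0 - specificity, sensitivity)


pts = roc_points([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1])
print(pts, auc(pts))  # AUC 0.75 for this toy example
```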

Performance of the deep convolutional neural network as a ternary classifier

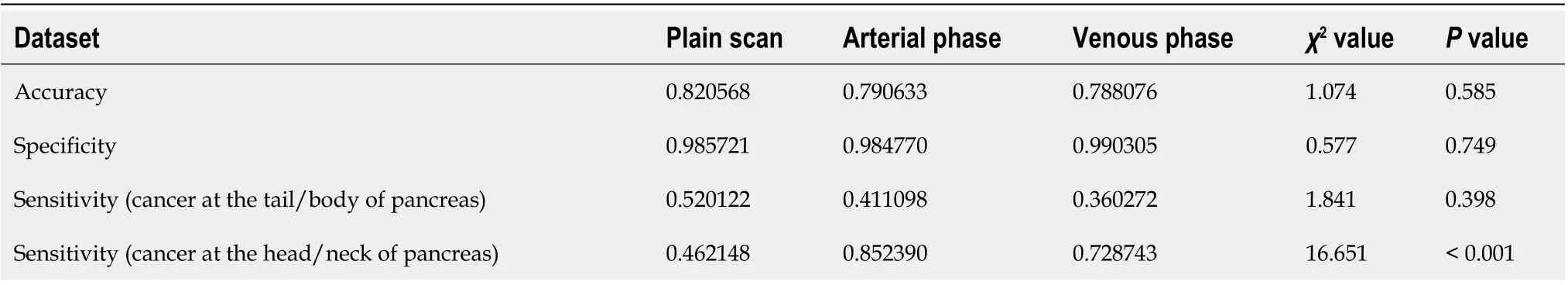

We also trained a ternary classifier using our CNN model and evaluated it by 10-fold cross-validation (Table 1). The overall diagnostic accuracy of the ternary CNN classifier was 82.06%, 79.06%, and 78.80% on the plain scan, arterial phase, and venous phase, respectively. The sensitivity for detecting cancers in the tail of the pancreas was 52.51%, 41.10%, and 36.03% on the three phases, respectively, and the sensitivity for detecting cancers in the head of the pancreas was 46.21%, 85.24%, and 72.87%, respectively.

The differences in accuracy and specificity among the three phases were not significant (χ2 = 1.074, P = 0.585; χ2 = 0.577, P = 0.749). The difference in sensitivity for cancers in the pancreatic head among the three phases was significant (χ2 = 16.651, P < 0.001), with the arterial phase having the highest sensitivity. However, the difference in sensitivity for cancers in the pancreatic tail among the three phases was not significant (χ2 = 1.841, P = 0.398). The results are summarized in Table 5.

Table 2 Characteristics of study participants

Table 3 Performance of the binary classifiers

Table 4 Diagnostic accuracy of the binary classifiers in plain scan: Convolutional neural network vs gastroenterologists and trainees

DISCUSSION

In this study, we developed an efficient pancreatic ductal adenocarcinoma classifier using a CNN trained on medium-sized datasets of CT images. We evaluated our approach on these datasets for both binary and ternary classification, with the aims of detecting and localizing masses. For the binary classifier, performance did not differ significantly among the plain, arterial, and venous phases; on the plain scan, its accuracy was 95.47%, sensitivity 91.58%, and specificity 98.27%. For the ternary classifier, the arterial phase had the highest sensitivity among the three phases for detecting cancer in the head of the pancreas, although the classifier achieved only moderate overall performance.

Artificial intelligence has made great strides in bridging the gap between human and machine capabilities. Among the available deep learning architectures, the CNN is the most commonly applied for analyzing visual images; it can receive an input image, assign weights to various aspects of the image, and distinguish one type of image content from another[17]. A CNN includes an input layer, an output layer, and multiple hidden layers. The hidden layers typically consist of convolutional layers, BN layers, ReLU layers, pooling layers, and fully connected layers[14]. A CNN acts like a black box; a major advantage is that it can make judgments without relying on prior experience or on the human effort of hand-crafting features. Previous studies showed that CT had a sensitivity of 76%-92% and an accuracy of 85%-95% for diagnosing pancreatic cancer, depending on the expertise of the reading physicians[18,19]. Our results indicate that our computer-aided diagnostic system has comparable detection performance.

Table 5 Performance of the ternary classifiers

Figure 3 Receiver operating characteristic curves and AUC values for the binary classification of the plain scan using the convolutional neural network model. Each trainee’s prediction is represented by a single green point, and the blue point is the trainees’ average. Each gastroenterologist’s prediction is represented by a single brown point, and the red point is the gastroenterologists’ average. ROC: Receiver operating characteristic; AUC: Area under the curve.

The primary goal of a CNN classifier is to detect pancreatic cancer effectively; thus, the model needs to prioritize sensitivity over specificity. In the binary classifier, all three phases achieved high accuracy and sensitivity, with no significant differences among them, which indicates the potential of the plain scan for tumor screening. The comparable sensitivity of the plain scan may be explained by the tumor sizes in our study and by the redundant information provided by the arterial or venous phase. In the current study, most tumors were larger than 2 cm, which made tumor morphology and size easier to assess on the plain scan. In addition, plain-scan images contain less noisy and unrelated information, so it is relatively easy for our CNN model to distill pancreatic cancer-related features from them. The accuracy of the binary classifier on the plain scan was 95.47%, with a sensitivity of 91.58% and a specificity of 98.27%. Compared with the judgments of gastroenterologists and trainees on the plain phase, the CNN model performed well: the accuracy of the CNN and the board-certified gastroenterologists was higher than that of the trainees, and the difference between the CNN and the gastroenterologists was not significant. When performing classifications on an Nvidia GeForce GTX 1080 GPU, our model took approximately 0.02 s per image. Although our CNN model did not consistently outperform the gastroenterologists, it processed images much faster than the approximately 10 s per image required by physicians and is not subject to fatigue. Thus, binary classifiers might be suitable for screening purposes in pancreatic cancer detection.

In our ternary classifier, the accuracy differences among the three phases were also not significant. Regarding sensitivity, the arterial phase had the highest sensitivity for malignant lesions in the pancreatic head. Because the typical appearance of an exocrine pancreatic cancer on CT is a hypoattenuating mass within the pancreas[20], the complex vascular structure around the head and neck of the pancreas could explain the better performance of the CNN classifier in detecting head and neck lesions in the arterial phase. Notably, an unopacified superior mesenteric vein (SMV) in the arterial phase may cause confusion in tumor detection. However, the SMV has a relatively fixed position in CT images and is accompanied by the superior mesenteric artery, which may help the classifier distinguish it from a tumor. Further studies on pancreatic segmentation should be carried out to solve this problem. We also tested ternary classification because surgeons choose the surgical approach based on the location of the mass in the pancreas. The conventional operation for cancer of the pancreatic head or uncinate process is pancreaticoduodenectomy, whereas resection of cancers located in the body or tail of the pancreas involves a distal subtotal pancreatectomy, usually combined with splenectomy. The ternary classifier did not perform as well as gastroenterologists, because once physicians judge that a mass exists, they also know its location.

Many CNN applications for evaluating organs have been reported, including for Helicobacter pylori infection, skin tumors, liver fibrosis, colon polyps, and lung nodules[12,13,21-23], as well as for segmenting the prostate, kidney tumors, brain tumors, and the liver[24-27]. CNNs also have potential applications for pancreatic cancer, mainly focusing on pancreas segmentation on CT[28,29]. Our work concentrates on the detection of pancreatic cancer, and the results demonstrate that, on a medium-sized dataset, an affordable CNN model can achieve comparable performance in pancreatic cancer diagnosis and can assist physicians. In another interesting work, Liu et al[30] adopted the Faster R-CNN model, which is more complex and harder to train and tune, for pancreatic cancer diagnosis. Their model was trained on mixed images from different phases and achieved an AUC of 0.9632, whereas we trained three separate classifiers for the plain scan, arterial phase, and venous phase. Our results indicate that the plain scan, which is easier to obtain and involves lower radiation exposure, is sufficient for the binary classifier, with an AUC of 0.9653.

Our study has several limitations. First, we used only pancreatic cancer and normal pancreas images; thus, our model was not tested on images showing inflammatory conditions of the pancreas, nor was it trained to assess vascular invasion, metastatic lesions, or other neoplastic lesions, e.g., intraductal papillary mucinous neoplasm. In the future, we will investigate the performance of our deep learning models in detecting these diseases. Second, our dataset had a pancreatic cancer/normal pancreas ratio of approximately 1:1; thus, the prevalence of malignancy in our study cohort was much higher than in the real world, which may have made the classification task easier. This distribution bias might have influenced the entire study, and further studies are needed to clarify this issue. Third, although the binary classifier achieved accuracy comparable to that of the gastroenterologists, the classifications were based on information from a single image. We speculate that physicians given additional information, such as the clinical course or dynamic CT images, would classify the condition more accurately. Further studies are needed to clarify this issue.

CONCLUSION

We developed a deep learning-based, computer-aided pancreatic ductal adenocarcinoma classifier trained on a medium-sized dataset of CT images. The binary classifier may be suitable for disease detection in general medical practice. The ternary classifier could be adopted to localize the mass, with moderate performance. Further improvement in model performance would be required before these classifiers could be integrated into a clinical strategy.

ARTICLE HIGHLIGHTS

ACKNOWLEDGEMENTS

We would like to thank all the participants and physicians who contributed to the study.

World Journal of Gastroenterology, 2020, Issue 34
